HAHA 2019 Competition

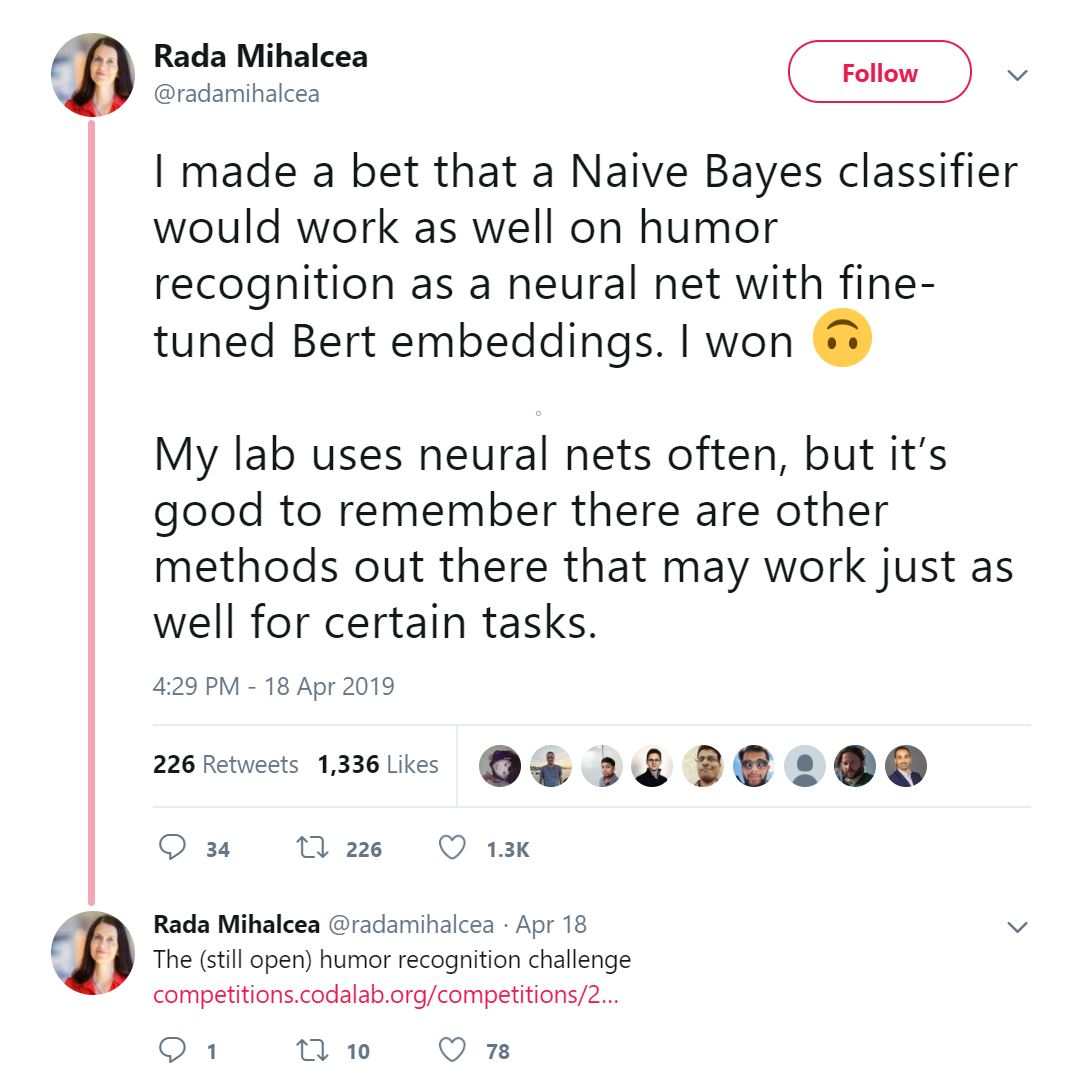

When this image of a tweet showed up in the fast.ai forum, I had to give it a shot.

I have been tinkering with sequence models of various kinds for a few years, and this challenge was a great way to refine my understanding of how to apply them. From the posts in the forum, the game plan seemed straightforward: establish a baseline with Naive Bayes, then beat it with ULMFiT.

I came in 3rd in classification and 2nd in the regression task. The top three teams all used some version of a pre-trained language model (LM) with a new “head” and some kind of ensemble to pick a model for the test set. Kevin Bird and Hiromi Suenaga explain their 2nd place entry here.

All the code for my solution is in my revised GitHub repo. Have a look!

The Fast.ai Forum Community

For almost a year now, I have been looking around on the fast.ai forums, helping to answer questions and submitting pull requests (PRs) to the core library. The text part of the library seemed particularly interesting to me so I dove in deep.

My journey started by replicating the WikiText-103 pre-training to IMDB classification pipeline. I pulled the data and replicated the process but swapped out the spaCy tokenizer for sentencepiece. From there, I had a full process baseline and developed an understanding of how all the parts worked. As has been said many times in the forums, you don’t learn from watching the videos and reading the notebooks - you learn from building it all yourself.

No Habla Español

With the details of the competition and an understanding of my intended process, I started to work through the data and build my classification pipeline. I don’t speak Spanish, but I viewed this as an advantage since I could not engineer features. I had to apply the process and look at the metrics rather than trying to engineer a solution; the network had to find the answers. I followed the transfer-learning method from lesson 4/lesson 12 that is the basis of Universal Language Model Fine-tuning for Text Classification (ULMFiT):

- Pre-train a language model (LM) with a large corpus of text

- Fine-tune that LM on the text data for the competition

- Train a classifier on top of the back-bone LM

The whole process ran as a loop: pick an LM design and corpus, carry it all the way through to classifying the validation set, then go back and improve the LM training data and the LM itself.

Building a Language Model

A language model (LM) embeds the structure of the language into the neural network. The architecture of this model becomes the “backbone” for the classifier you will eventually use. I experimented with several, but in the end I used the AWD_LSTM model with QRNN units as defined in fast.ai.

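```python
from fastai.text import *  # fastai v1 API

# `data` (a language-model DataBunch) and `dropmult` are defined elsewhere.
config = awd_lstm_lm_config.copy()
config['qrnn'] = True    # use QRNN units in place of the LSTM cells
config['n_hid'] = 2304   # hidden units per layer

learn = language_model_learner(data, AWD_LSTM,
                               drop_mult=dropmult, pretrained=False,
                               config=config)
```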

To train this LM, you use the prior information in the sequence of tokens to predict the next token. Often you have one token per word, but sometimes you have sub-word units and you predict the next sub-word unit in the sequence. For example, the word predicting could be split into the sub-word units predict and ing. Many English words end in ing, so it is likely that ing is a sub-word unit in an English vocabulary.
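
As a rough illustration of that training setup, the sketch below builds (input, target) pairs in which each target is simply the input shifted by one token. The function name `next_token_pairs`, the window length, and the sample tokens are made up for this example and are not taken from the competition code.

```python
def next_token_pairs(tokens, seq_len):
    """Split a token stream into (inputs, targets) windows.

    Each targets window is the inputs window shifted one position
    to the right, so the model learns to predict the next token.
    """
    pairs = []
    for start in range(0, len(tokens) - seq_len, seq_len):
        inputs = tokens[start:start + seq_len]
        targets = tokens[start + 1:start + seq_len + 1]
        pairs.append((inputs, targets))
    return pairs


# Hypothetical sub-word tokenization of "predicting the rating".
tokens = ["predict", "ing", "the", "rat", "ing"]
pairs = next_token_pairs(tokens, seq_len=2)

for inputs, targets in pairs:
    print(inputs, "->", targets)
```

Note that the sub-word unit ing appears twice in this tiny stream, which is the kind of reuse that keeps a sub-word vocabulary small.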

Whatever you choose for your LM architecture, you need a corpus for training the LM, and you want the vocabulary of that corpus to map onto your classifier training set as closely as possible so that few words fall outside your vocabulary.
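
One way to put a number on that overlap is an out-of-vocabulary rate: the fraction of tokens in the classifier text that the LM vocabulary does not contain. The sketch below is a minimal version using whitespace tokens. The helper names, the `max_vocab` value, and the sample sentences are assumptions for illustration only.

```python
from collections import Counter


def build_vocab(corpus_tokens, max_vocab=60000, min_freq=1):
    """Keep the most frequent tokens of the LM corpus as the vocabulary."""
    counts = Counter(corpus_tokens)
    return {tok for tok, n in counts.most_common(max_vocab) if n >= min_freq}


def oov_rate(vocab, target_tokens):
    """Fraction of target tokens that are missing from the vocabulary."""
    if not target_tokens:
        return 0.0
    missing = sum(1 for tok in target_tokens if tok not in vocab)
    return missing / len(target_tokens)


# Made-up stand-ins for an encyclopedia-style corpus and a tweet.
wiki_tokens = "la ciudad es la capital de la provincia y la ciudad es grande".split()
tweet_tokens = "jajaja xque la ciudad es tan obvio".split()

vocab = build_vocab(wiki_tokens)
rate = oov_rate(vocab, tweet_tokens)
print(f"OOV rate: {rate:.2f}")
```

A high rate on the classifier text is a hint that the LM corpus is a poor match for the target domain.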

Building a Classifier

With a backbone defined, you now change the top of the model to create either a classification or a regression head. For the classifier, I had labels for all my training and validation data, but the classes were imbalanced. I used Synthetic Minority Oversampling Technique (SMOTE) to balance them. I have found from other projects that this can help the model train much better than feeding in the imbalanced classes. For the regression, I only had scores for the “humorous” tweets. I filled in the non-humorous tweets with zeros since I wanted them to get a low rating.
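
The two data-preparation steps can be sketched in plain Python. Note the substitution: the balancing function below does simple random oversampling (duplicating minority examples), not SMOTE, which synthesizes new points in feature space. The field names `text`, `is_humor`, and `score` are hypothetical and may not match the competition files.

```python
import random
from collections import Counter


def oversample_minority(texts, labels, seed=0):
    """Duplicate randomly chosen minority examples until classes are equal.

    This is plain random oversampling, a simpler stand-in for SMOTE.
    """
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_texts, out_labels = list(texts), list(labels)
    for label, n in counts.items():
        pool = [t for t, l in zip(texts, labels) if l == label]
        extra = rng.choices(pool, k=target - n)
        out_texts.extend(extra)
        out_labels.extend([label] * len(extra))
    return out_texts, out_labels


def fill_regression_targets(rows):
    """Return (text, score) pairs, using 0.0 for tweets without a score."""
    filled = []
    for row in rows:
        has_score = row["is_humor"] and row["score"] is not None
        filled.append((row["text"], float(row["score"]) if has_score else 0.0))
    return filled


texts = ["a", "b", "c", "d"]
labels = [1, 0, 0, 0]
balanced_texts, balanced_labels = oversample_minority(texts, labels)

rows = [
    {"text": "funny tweet", "is_humor": True, "score": 3.2},
    {"text": "plain tweet", "is_humor": False, "score": None},
]
targets = fill_regression_targets(rows)
```

Oversampling should be applied to the training split only, so that duplicated examples do not leak into validation.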

Selecting the LM Training Corpus

This process was iterative. I made a first attempt, tried to classify the data, then went back and improved my training corpus and continued to refine my LM to improve my model.

First Attempt: Wikipedia

I started with Spanish Wikipedia and tried to train an LM from there. Since I had a pipeline using sentencepiece, I used that for my tests. I took that LM and built a classifier for tweets from the competition (with no fine-tuning) and saw a lot of out-of-vocabulary tokens. That meant the Spanish Wikipedia vocabulary did not overlap well with the Twitter vocabulary.

Even without speaking Spanish, I could see why:

- Jajajajajajaja xque todos me contestan ese motivo, tan obvio es todo (?😂😂😂😂

- Mi Espalda Me Quiere Matarrrrrrr😭😭

- ¡FELIZ NAVIDAD A TODOS! 🎄🎅🏻

- #FiebreDeViña Bose tiene cara de muerto, o se cree vampiro🎧

There are a lot of emoticons! There is a bunch of slang and other words that would not show up in Wikipedia entries. It was time to find a new LM corpus.

Second Attempt: Spanish Twitter

About a year ago, I had gotten fed up with how Twitter moved around the time sequence of my feed and tried to show me what I might like. So I went looking for a command-line Twitter feed app, found tweepy, and adapted it to my needs.

To collect a corpus for my LM, I pulled any tweet marked as Spanish that contained the terms ‘el’, ‘su’, ‘lo’, ‘y’ or ‘en’. I excluded retweets and wrote everything to a file. I ran the script over three 4-hour periods and got 475k tweets to train on.
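
The selection rule itself is easy to express on its own, independent of how the tweets are fetched. The sketch below assumes each tweet arrives as a dict with `lang` and `text` keys and, for retweets, a `retweeted_status` key, as in Twitter API v1.1 JSON. It is an illustration of the filter, not the collection script I ran.

```python
import re

# The common Spanish words used as track terms.
TERMS = {"el", "su", "lo", "y", "en"}


def keep_tweet(tweet):
    """Return True for original Spanish tweets containing a track term."""
    if tweet.get("lang") != "es":
        return False
    text = tweet.get("text", "")
    # Retweets carry a `retweeted_status` payload or start with "RT @".
    if "retweeted_status" in tweet or text.startswith("RT @"):
        return False
    words = set(re.findall(r"\w+", text.lower()))
    return bool(words & TERMS)


# Made-up sample tweets.
samples = [
    {"lang": "es", "text": "Feliz navidad y año nuevo"},
    {"lang": "es", "text": "RT @alguien: el mejor día"},
    {"lang": "en", "text": "el nino is back"},
    {"lang": "es", "text": "Jajajaja"},
]
kept = [t["text"] for t in samples if keep_tweet(t)]
print(kept)
```

Because the terms are among the most common words in Spanish, the filter acts as a broad net for everyday Spanish tweets rather than a topic filter.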

When I used this new LM to train my classifier, I had fewer out-of-vocabulary words and I was able to use the emoticons as part of my classification. The resulting classifier did better than the one trained on Wikipedia, so I proceeded with other improvements.

Fine-tuning the model

With my LM corpus set, I fine-tuned the weights of the LM on the train and test text data. I did not expand or change the vocabulary from what I had in my original model, but I did fine-tune the LM so that it best predicts the next word in the data that we are going to use for classification. This enhancement further boosted the performance of the resulting classifier, again reinforcing the idea that a better LM leads to a better final model.

Two more enhancements

Cross-validation

As the deadline for the competition neared, more people posted on the forum thread. The first-place entrant talked about doing 5-fold cross-validation. Up until this point, I had a single validation set and training set. With that kind of training, you are bound to over-fit to that particular validation set. With cross-validation, you select 5 different validation sets and can then average the model outputs, giving a result that is more robust on the test data you will see later.
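
A minimal sketch of the idea, in plain Python: split the data into 5 folds so each item lands in exactly one validation set, train one model per fold, and average the predictions on the test set. The helper names are mine, and the fold assignment here is a simple stride with no shuffling, which is an assumption rather than what any entrant actually used.

```python
def kfold_indices(n_items, k=5):
    """Yield (train_idx, valid_idx); each item is validated exactly once.

    Items are assigned to folds by stride (0, k, 2k, ... go to fold 0),
    without shuffling.
    """
    folds = [list(range(i, n_items, k)) for i in range(k)]
    for i, valid_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, valid_idx


def average_predictions(per_model_preds):
    """Average the predictions of several models, item by item."""
    n_models = len(per_model_preds)
    return [sum(col) / n_models for col in zip(*per_model_preds)]


splits = list(kfold_indices(10, k=5))

# Two hypothetical models scoring the same two test tweets.
ensemble = average_predictions([[0.0, 1.0], [0.5, 0.5]])
print(ensemble)
```

In practice you would shuffle (and probably stratify by class) before assigning folds, so every validation set reflects the overall class balance.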

Setting the seed

While working on code for the core library, I had found setting the random seed to be very helpful. I wanted results that were identical so I could test the code changes I was making. In talking with Piotr on the forums, he suggested using the seed as a hyper-parameter and sampling over many seeds to see which one would produce the best classification output. I combined this with my cross-validation and tested 20 random seeds with 5-fold cross-validation on each one. The result was 20 robust models, and I selected the best for my competition submission.
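
The search loop looks roughly like the sketch below. `train_and_score` is a hypothetical stand-in that returns a made-up, seed-dependent score so the example runs on its own. In the real pipeline it would seed Python, NumPy, and PyTorch, train one classifier on the given fold, and return its validation metric.

```python
import random
import statistics


def train_and_score(seed, fold):
    """Hypothetical stand-in for training one model on one fold.

    Returns a deterministic pseudo-random score between 0.78 and 0.82.
    """
    rng = random.Random(seed * 1000 + fold)
    return 0.80 + rng.uniform(-0.02, 0.02)


def search_seeds(seeds, n_folds=5):
    """Cross-validate every seed and return the one with the best mean score."""
    results = {}
    for seed in seeds:
        fold_scores = [train_and_score(seed, fold) for fold in range(n_folds)]
        results[seed] = statistics.mean(fold_scores)
    best_seed = max(results, key=results.get)
    return best_seed, results


best_seed, results = search_seeds(range(20))
print(best_seed, round(results[best_seed], 4))
```

Averaging over the folds before comparing seeds matters here: picking a seed by a single validation score would reward lucky splits rather than better models.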

Conclusion

Being in a live competition pushed me to learn much more than I had by just looking at notebooks and replicating outputs from scratch. When I fell from first place to second, I was really motivated to figure out other details and tweaks to improve my score. I learned a lot, but more than that, it was the contributions, suggestions and encouragement from the community that pulled me along to the finish line.